
Classes |

| class | Mpi_DType |

| class | Mpi_DType< int > |

| class | Mpi_DType< unsigned int > |

| class | Mpi_DType< unsigned long > |

Functions |

| template<> |

| int | determine_proc_of_this_element< std::pair< id_t, id_t > > (std::pair< id_t, id_t > nodes, std::vector< int > &procs) |

| template<> |

| int | determine_proc_of_this_element< utility::triple< id_t, id_t, id_t > > (utility::triple< id_t, id_t, id_t > nodes, std::vector< int > &procs) |

| template<> |

| int | determine_proc_of_this_element< id_t > (id_t node, std::vector< int > &procs) |

| std::ostream & | operator<< (std::ostream &ostr, const utility::triple< id_t, id_t, id_t > &x) |

| std::ostream & | operator<< (std::ostream &ostr, const std::pair< id_t, id_t > &x) |

| int | set_ownership (const std::vector< id_t > &gids, std::vector< int > &owned_by, MPI_Comm comm) |

| void | compute_mgid_map (id_t num_global_gids, const std::vector< id_t > &gids, std::vector< id_t > &eliminated_gids, id_t *num_global_mgids, std::map< id_t, id_t > &mgid_map, MPI_Comm comm) |

| int | set_counts_displs (int my_count, std::vector< int > &counts, std::vector< int > &displs, MPI_Comm comm) |

| template<typename T> |

| int | determine_proc_of_this_element (T nodes, std::vector< int > &procs) |

| template<typename T> |

| int | assign_gids (std::vector< T > &elements, std::vector< id_t > &gids, std::vector< int > &elem_counts, std::vector< int > &elem_displs, MPI_Comm comm) |

| template<typename T> |

| int | assign_gids2 (bool *my_claims, std::vector< T > &elements, std::vector< id_t > &gids, std::vector< int > &elem_counts, std::vector< int > &elem_displs, MPI_Comm comm) |

| void | remove_gids (std::vector< id_t > gids, std::vector< id_t > eliminated_gids, std::vector< id_t > map_elimated) |

| int parallel::set_ownership (const std::vector< id_t > &gids, std::vector< int > &owned_by, MPI_Comm comm) |

| void parallel::compute_mgid_map (id_t num_global_gids, const std::vector< id_t > &gids, std::vector< id_t > &eliminated_gids, id_t *num_global_mgids, std::map< id_t, id_t > &mgid_map, MPI_Comm comm) |

Compute mapping of original gids to effective gids after elimination of some gids.

This function can be used to compute the mapping for boundary conditions.

Must be called collectively.

- Parameters:

-

| [in] | num_global_gids | Number of global gids. |

| [in] | gids | Vector of gids used on the local processor (owned by local or remote processors) |

| [in] | eliminated_gids | Vector of gids which need to be eliminated. |

| [out] | num_global_mgids | Number of global mapped ids (mgids) on output. |

| [out] | mgid_map | Map that transforms all locally used gids to mgids, mgid = mgid_map[gid], on output. |

| [in] | comm | MPI communicator. |

- Author:

- Roman Geus

Definition at line 101 of file parallel_tools.cpp.

References set_counts_displs().

Referenced by mesh::ParallelTetMesh::set_edge_gids(), mesh::ParallelTetMesh::set_face_gids(), NedelecMesh::set_map(), and LagrangeMesh::set_map().
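The idea of mapping gids to mgids after elimination can be illustrated with a serial, single-process sketch. It is not the library's implementation. It assumes that surviving gids are renumbered consecutively in ascending order and that eliminated gids get no entry in the map; the reference above states neither. The names `GlobalId` and `compute_mgid_map_serial` are hypothetical, and the collective MPI step that would combine eliminated gids across processors is left out entirely.

```cpp
#include <algorithm>
#include <map>
#include <vector>

// Hypothetical stand-in for the library's id_t typedef.
using GlobalId = int;

// Serial sketch of a gid -> mgid mapping (no MPI involved).
// Assumption: surviving gids are compacted in ascending order, so
//   mgid = gid - (number of eliminated gids smaller than gid).
// Assumption: eliminated gids get no entry in mgid_map.
// Returns the number of mapped ids.
GlobalId compute_mgid_map_serial(GlobalId num_global_gids,
                                 const std::vector<GlobalId>& gids,
                                 std::vector<GlobalId> eliminated_gids,
                                 std::map<GlobalId, GlobalId>& mgid_map) {
    // Sort and deduplicate the eliminated gids so they can be binary-searched.
    std::sort(eliminated_gids.begin(), eliminated_gids.end());
    eliminated_gids.erase(
        std::unique(eliminated_gids.begin(), eliminated_gids.end()),
        eliminated_gids.end());

    mgid_map.clear();
    for (GlobalId gid : gids) {
        auto it = std::lower_bound(eliminated_gids.begin(),
                                   eliminated_gids.end(), gid);
        if (it != eliminated_gids.end() && *it == gid) {
            continue;  // this gid is eliminated, so it is not mapped
        }
        // Shift down by the count of eliminated gids below this one.
        mgid_map[gid] = gid - static_cast<GlobalId>(it - eliminated_gids.begin());
    }
    return num_global_gids - static_cast<GlobalId>(eliminated_gids.size());
}
```

With six gids 0 to 5 and gids 1 and 4 eliminated, this sketch maps 0, 2, 3, 5 to 0, 1, 2, 3 and reports four mapped ids.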

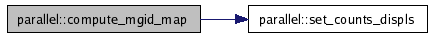

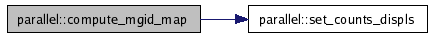

Here is the call graph for this function:

| template<typename T> |

| int parallel::assign_gids (std::vector< T > &elements, std::vector< id_t > &gids, std::vector< int > &elem_counts, std::vector< int > &elem_displs, MPI_Comm comm) |

Assign global ids to "elements" (which are possibly shared by processors) and determine ownership of "elements".

- Parameters:

-

| [in,out] | elements | Vector of elements (like faces and edges). The vector will be sorted in-place. Each element is assumed to be unique. |

| [out] | gids | Global ids of elements. The order of the gids corresponds to the rearranged ordering of elements. |

| [out] | elem_counts | Number of elements owned by each processor. |

| [out] | elem_displs | Offsets to the block owned by each processor. |

| [in] | comm | MPI Communicator. |

- Returns:

- Number of global ids.

T needs to support comparison for equality and order.

- Author:

- Dag Evensberget

Implementation note: internally, a vector the size of recvbuf records which processor owns each element.

Definition at line 76 of file parallel_tools.h.

References mesh::ID_NONE, rDebugAll, and set_counts_displs().

Referenced by mesh::ParallelTetMesh::set_edge_gids(), and mesh::ParallelTetMesh::set_face_gids().
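The relationship between `elem_counts` and `elem_displs` can be sketched without MPI. The snippet below assumes the offsets are an exclusive prefix sum of the per-processor counts, and that each processor owns one contiguous block of global ids starting at its offset. The reference above does not spell out either point. The helper names `displs_from_counts` and `owner_of_gid` are hypothetical and not part of the library.

```cpp
#include <cstddef>
#include <vector>

// Exclusive prefix sum: displs[p] = counts[0] + ... + counts[p-1].
// Assumption: this is how offsets relate to counts.
std::vector<int> displs_from_counts(const std::vector<int>& counts) {
    std::vector<int> displs(counts.size(), 0);
    for (std::size_t p = 1; p < counts.size(); ++p) {
        displs[p] = displs[p - 1] + counts[p - 1];
    }
    return displs;
}

// Rank that owns `gid`, or -1 if gid lies outside every block.
// Assumption: processor p owns gids in [displs[p], displs[p] + counts[p]).
int owner_of_gid(int gid,
                 const std::vector<int>& counts,
                 const std::vector<int>& displs) {
    for (std::size_t p = 0; p < counts.size(); ++p) {
        if (gid >= displs[p] && gid < displs[p] + counts[p]) {
            return static_cast<int>(p);
        }
    }
    return -1;
}
```

For counts of 3, 0, and 2 on three processors, the offsets in this sketch come out as 0, 3, and 3, so gid 3 belongs to the third processor (rank 2) and the second processor owns nothing.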

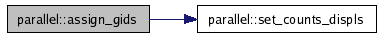

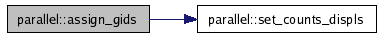

Here is the call graph for this function:

| template<typename T> |

| int parallel::assign_gids2 (bool *my_claims, std::vector< T > &elements, std::vector< id_t > &gids, std::vector< int > &elem_counts, std::vector< int > &elem_displs, MPI_Comm comm) |

Assign global ids to "elements" (which are possibly shared by processors) and determine ownership of "elements".

- Parameters:

-

| [in] | my_claims | Boolean vector, ... |

| [in,out] | elements | Vector of elements (like faces and edges). The vector will be sorted in-place. Each element is assumed to be unique. |

| [out] | gids | Global ids of elements. The order of the gids corresponds to the rearranged ordering of elements. |

| [out] | elem_counts | Number of elements owned by each processor. |

| [out] | elem_displs | Offsets to the block owned by each processor. |

| [in] | comm | MPI Communicator. |

- Returns:

- Number of global ids.

T needs to support comparison for equality and order.

- Author:

- Dag Evensberget

Implementation note: internally, a vector the size of recvbuf records which processor owns each element.

Definition at line 258 of file parallel_tools.h.

References mesh::ID_NONE, rDebugAll, rErrorAll, and set_counts_displs().
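The requirement that T support comparison for equality and order can be made concrete with a small sketch. It assumes that `operator==` and `operator<` are the operations needed, which is a guess based on the in-place sort described above. `Triple` is a hypothetical stand-in for `utility::triple< id_t, id_t, id_t >`, and `sort_and_check_unique` is an illustrative helper, not a library function.

```cpp
#include <algorithm>
#include <tuple>
#include <utility>
#include <vector>

// Hypothetical stand-in for utility::triple<id_t, id_t, id_t>.
struct Triple {
    int first, second, third;
};

// Equality: all three node ids match.
inline bool operator==(const Triple& a, const Triple& b) {
    return std::tie(a.first, a.second, a.third) ==
           std::tie(b.first, b.second, b.third);
}

// Order: lexicographic comparison of the three node ids.
inline bool operator<(const Triple& a, const Triple& b) {
    return std::tie(a.first, a.second, a.third) <
           std::tie(b.first, b.second, b.third);
}

// Sorts `elements` in place (needs operator<) and returns true
// if no two elements compare equal (needs operator==).
template <typename T>
bool sort_and_check_unique(std::vector<T>& elements) {
    std::sort(elements.begin(), elements.end());
    return std::adjacent_find(elements.begin(), elements.end()) ==
           elements.end();
}
```

`std::pair` already provides both operators, so a vector of edges stored as `std::pair< int, int >` works with the same helper unchanged.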

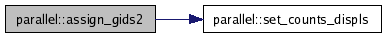

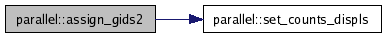

Here is the call graph for this function:
